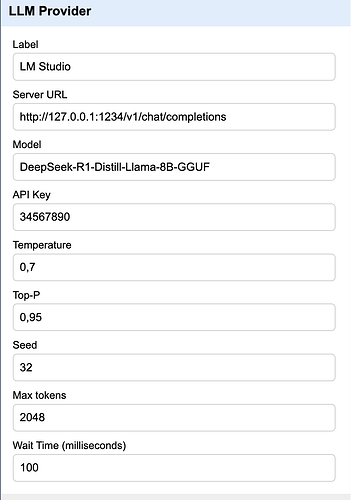

Hi @Michael_Markert, thanks. I tried to use it, but I cannot connect it to the LM Studio server (without a "seed" value: generic server error; with a "seed" value: timeout). I don't want to use remote models.

Hi @colognella

In the LLM Provider definition, can you update the Temperature and Top-P values to use a period as the decimal separator, i.e. replace 0,7 with 0.7 and 0,95 with 0.95? Check the flow after this change and let me know.
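A small sketch of why the separator matters, assuming the extension places the Temperature and Top-P fields into the JSON request body as numbers: JSON numbers (like Python's `float()`) only accept a period as the decimal separator, so a value typed as 0,7 cannot be parsed. The helpers `parse_decimal` and `sampling_params` below are hypothetical and only illustrate the normalization.

```python
import json


def parse_decimal(text: str) -> float:
    """Hypothetical helper: accept '0,7' or '0.7' and return 0.7.

    JSON and Python's float() only understand '.' as the decimal separator,
    so a comma typed under a European locale is swapped for a period first.
    """
    return float(text.strip().replace(",", "."))


def sampling_params(temperature: str, top_p: str) -> str:
    """Build the sampling part of an OpenAI-style request body as JSON text."""
    return json.dumps({
        "temperature": parse_decimal(temperature),
        "top_p": parse_decimal(top_p),
    })


if __name__ == "__main__":
    # Without normalization, the comma form is rejected outright.
    try:
        float("0,7")
    except ValueError as err:
        print("raw comma value rejected:", err)

    print(sampling_params("0,7", "0,95"))  # {"temperature": 0.7, "top_p": 0.95}
```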

-Sunil

Same here, surprisingly. I had never tried the LLM extension with LM Studio before, and it does not connect, while other local (Ollama, llama.cpp) and remote (OpenRouter) connections work fine.

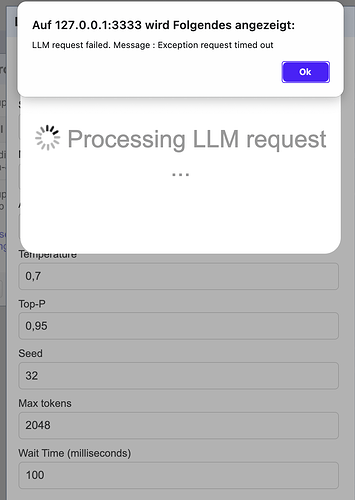

Hi @Sunil_Natraj, unfortunately no success…

(Same result with the model name without the path.)

I fiddled with the settings in LM Studio (CORS, local serving, etc.), but to no avail.

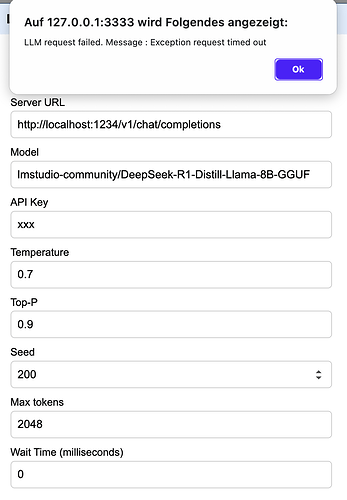

Hi @colognella

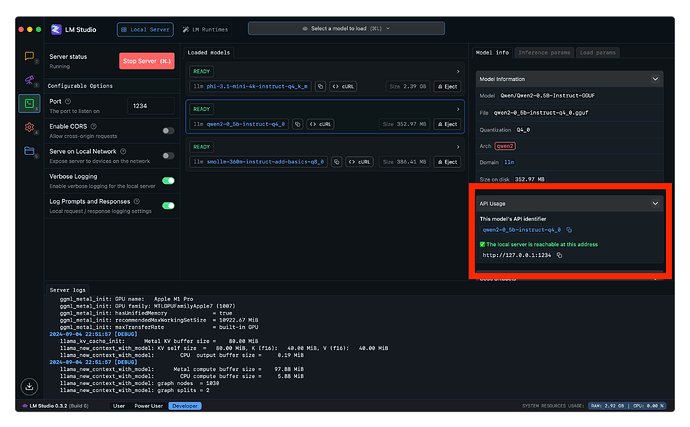

The model name needs to be corrected. In LM Studio, each model has an API identifier; can you set the model name to that? Refer to the annotation in the attached screen grab.
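To double-check the exact identifier, LM Studio's local server exposes an OpenAI-compatible `/v1/models` endpoint. The sketch below assumes the server runs at the default address `http://localhost:1234` (adjust if you changed the port) and that the response has the usual OpenAI-style `{"data": [{"id": ...}]}` shape; the function names are hypothetical. The value to enter as the Model in the LLM Provider would be one of the printed `id` values.

```python
import json
import urllib.request

# Assumption: LM Studio's server is running locally on its default port.
MODELS_URL = "http://localhost:1234/v1/models"


def model_ids(response_body: str) -> list:
    """Extract model identifiers from an OpenAI-style /v1/models response."""
    payload = json.loads(response_body)
    return [entry["id"] for entry in payload.get("data", [])]


def fetch_model_ids(url: str = MODELS_URL, timeout: float = 5.0) -> list:
    """Query the local server and return the identifiers it reports."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return model_ids(resp.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        for model_id in fetch_model_ids():
            print(model_id)
    except OSError as err:  # URLError and socket timeouts are OSError subclasses
        print("Could not reach LM Studio:", err)
```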

Hi @Sunil_Natraj, thanks. Did you manage to get it running this way? I'm still stuck.

Hi @colognella, LM Studio is not supported on my Mac (Intel). I checked the LM Studio documentation, and that is how I identified the model ID to specify. Let me know if you are still running into any issues.

I'm trying to configure the Gemini endpoint, but with no success. Any tips?

B.

Hi @belfra,

The Gemini AI service currently does not support the "seed" parameter. I was able to confirm that the Gemini AI service works fine if the seed parameter is not passed. I will update the LLM extension and notify you when the update is ready.

The API endpoint is - https://generativelanguage.googleapis.com/v1beta/openai/chat/completions
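For testing the endpoint outside OpenRefine, here is a minimal sketch of an OpenAI-compatible chat completions request against that URL using only the Python standard library. It assumes a Gemini API key in a `GEMINI_API_KEY` environment variable and a valid model name in `GEMINI_MODEL` (both variable names chosen here for illustration), with the key sent as a Bearer token; `build_body` and `build_request` are hypothetical helpers. The point it illustrates is the one above: the body leaves out `seed` unless one is explicitly given.

```python
import json
import os
import urllib.request
from typing import Optional

GEMINI_URL = "https://generativelanguage.googleapis.com/v1beta/openai/chat/completions"


def build_body(model: str, prompt: str, temperature: float = 0.7,
               top_p: float = 0.95, seed: Optional[int] = None) -> dict:
    """Build an OpenAI-style chat completions body."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "top_p": top_p,
    }
    # Only include "seed" when one is given; per the thread, Gemini's
    # endpoint currently fails when it is present, so leave it as None there.
    if seed is not None:
        body["seed"] = seed
    return body


def build_request(body: dict, api_key: str, url: str = GEMINI_URL) -> urllib.request.Request:
    """Wrap the body in a POST request with JSON and Bearer-token headers."""
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    api_key = os.environ.get("GEMINI_API_KEY")
    model = os.environ.get("GEMINI_MODEL")  # must be a valid Gemini model name
    if api_key and model:
        req = build_request(build_body(model, "Say hello."), api_key)
        with urllib.request.urlopen(req, timeout=30) as resp:
            reply = json.load(resp)
        print(reply["choices"][0]["message"]["content"])
    else:
        print("Set GEMINI_API_KEY and GEMINI_MODEL to send a test request.")
```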

The file name is still openrefine-llm-extension-0.1.2.zip.

It was a minor update so the release version was not updated.

Thanks Sunil, you are the guy!

B.

Hello, Sunil. Sorry for my limitations, but I still haven't managed to connect to Google's AI. Could you please send me the settings for the new plugin?

Thank you.

B.

Hi @belfra

I am assuming you have downloaded and installed the latest version of the LLM Extension. Please find below the details for the LLM Provider:

- Server URL: https://generativelanguage.googleapis.com/v1beta/openai/chat/completions

- Model: Ensure you have set a valid model name. See screenshot below.

It worked perfectly. Thank you so, so much.

B.

Hey Sunil,

Just wanted to say I finally found an operation for which your extension in OpenRefine was a good fit (I had to monitor the results closely). It works just as expected, and I am obviously very pleased. (Testing the endpoint fails in the settings window, but that's a detail; the service itself (OpenAI) works fine.) It might need an upgrade soon to use the new Responses API.

Thanks again!

Thank you @archilecteur

I will keep an eye on the OpenAI update.